Me and Cursor are breaking up

⚠ This article contains strong language which is not suitable for all viewers. Additionally, this work is released under the CC0 1.0 Universal (CC0 1.0) Public Domain Dedication. To the extent possible under law, I waive all copyright and related or neighboring rights to this work. View the legal deed here.

Over the past couple of months, I have been on and off Cursor, the "latest and greatest" AI editor. The experience? Well... interesting. Today, I want to tell you the story of why Cursor stopped working for me, and why it's time for me to switch back to JetBrains. Beyond that, I want to share my theories about why AI is the way it is today, and what it might look like in the future.

Also, I do know that "me and Cursor" should actually be "Cursor and I," though I couldn't find a proper reason why this should be. Nobody speaks like this, and I am no professional. As much as I hate to make errors, it's just such a stupid English grammar rule. Fuck you, Collins Dictionary.

How does Cursor work financially?

I want to start out by explaining how an AI editor works behind the scenes. It won't be repetitive, I promise.

First, we have a startup with LOTS of funding. I'm talking about Anysphere, which recently raised 900 million fucking dollars for Cursor. But what will they do with this $900m? Burn it, of course! In place of fire, though, are expensive APIs. I can say with almost 100% certainty that most of this funding will not be used to develop the editor.

See, the concept of Cursor is fantastic. For $20 a month, I knew that I was going to get 500 chances a month to get pretty good advice and edits for my code. I said to myself, "500 premium requests is good, and if I ever run out, it's unlimited at slow speeds!" You have to ask yourself where the money is coming from, though.

I can easily rack up a bigger bill for Anysphere than my monthly Cursor Pro subscription costs. Hell, they've certainly lost money on me! But that's the point. Right now, there is so much money in AI that we're getting access to massive models at a fraction of what they should cost. All these companies are getting fucked by the costs right now, but they're banking on us not giving up on AI.

It's a bubble, to quote one of my favorite YouTubers on AI, Pivot to AI. That bubble has to burst at some point. It doesn't mean that AI will die, but it certainly means that, at some point, prices will rise astronomically.

It's not just the LLMs being trained!

While everyone is talking about the ethics of training LLMs, a lot of people are missing the other part of training! The LLMs aren't the only ones being trained. These AI companies around us are training us to use AI.

They are bringing it into our lives at a (sometimes) affordable price. They are losing a ton of money just to provide these models and tooling to us. But again, why would they do this? Have businesses stopped being the scum of the Earth? Fuck no!

Just like with computers and smartphones, we are being trained to adopt AI into our lives. We can't leave the house without our phones now, and I believe the same is soon to come with AI. These companies beg you to find ways to integrate AI into your life deeply enough that you need a subscription. They are willing to lose money at rapid rates, build multi-billion-dollar datacenters, and do absolutely anything to put a chat interface in front of you. They want you, your friends, your family, and your employer(s) to use AI so much that it will justify the real subscription price. And that price, in my opinion, will be far higher than the prices we have now.

My training

I onboarded with ChatGPT back in 2023 when it was still a baby and in beta. I believe DALL-E was the first AI product I had ever used. I was mind-blown. I got access to GitHub Copilot later on with a free 3-month trial (back when those were a thing!). Once again, I was mind-blown that it could literally read my mind while I was typing. The next line I was about to write was already waiting for me, and I only had to press the TAB key! That was back when there wasn't a "Copilot Chat" or agent yet.

When I came back from rehab in 2024, I was welcomed into a new world of programming. Editors had progressed much further with AI, and I was back on Copilot (now with Chat). It would be a few more months before I discovered Cursor and ended up subscribing to their Pro plan for $20 a month. At the time, they were offering 500 fast requests (and unlimited slow requests). It seemed like a no-brainer, as the limits and quality were certainly better than Copilot's (I still believe Cursor is better than Copilot).

That's when my training started. I got into a cycle of programming alongside agent edits and chatting with AI whenever I wanted to figure out how to do something. Even if it was 4AM and I was tired as fuck, I could still make commits! I figured that my pre-existing programming experience would help me write quality code. If I worked alongside Agent mode, I thought I could safely increase my efficiency and do more with programming.

This turned out to be only partially true. I rapidly developed skills and picked up programming patterns I hadn't seen before. I was able to quickly pump out new features and make commits I was very confident in. However, I was blind to how I was being trained and reshaped as a programmer.

The side effects of auto-pilot editors are deadly

No, not really. But close. Essentially, Cursor completely tore up my previous programming routine within one fucking month of usage. I noticed that I started caring so much about being perfect in every way. Now that I had the ability to learn and apply higher-level concepts to my codebases, I turned programming into a job. I was learning, sure, but not in a structured way.

It's like learning how to build a website in Next.js when you haven't learned basic HTML yet. You are only learning the changes, suggestions, and advice it feeds you, while staying unaware of other solutions to your problems (many of which can be simpler). I justified it by pointing to all the time I put into exploring other solutions (e.g., picking and sticking with an ORM and a React framework), but I don't believe that was the answer either. Think about it: does auto-correct help you learn new words?

I now believe the right way to learn programming (remember: we're always learning) is a progressive, careful, and thought-out approach. It doesn't mean doing courses. It doesn't mean using AI tools. It doesn't mean reading books. It doesn't mean LeetCode. It's somewhere in the middle of everything. But don't listen to me just yet! You have to develop your own plan for learning, which may look very different from mine.

For me, I think the solution is 10% Stack Overflow (of course!), 10% random materials (e.g., blog posts), 20% AI, 20% YouTube, 20% documentation, and 30% talking to other developers. But the real question is: what is your strategy? How are you going to learn? What is your distribution?

At the end of the day, should we innovate?

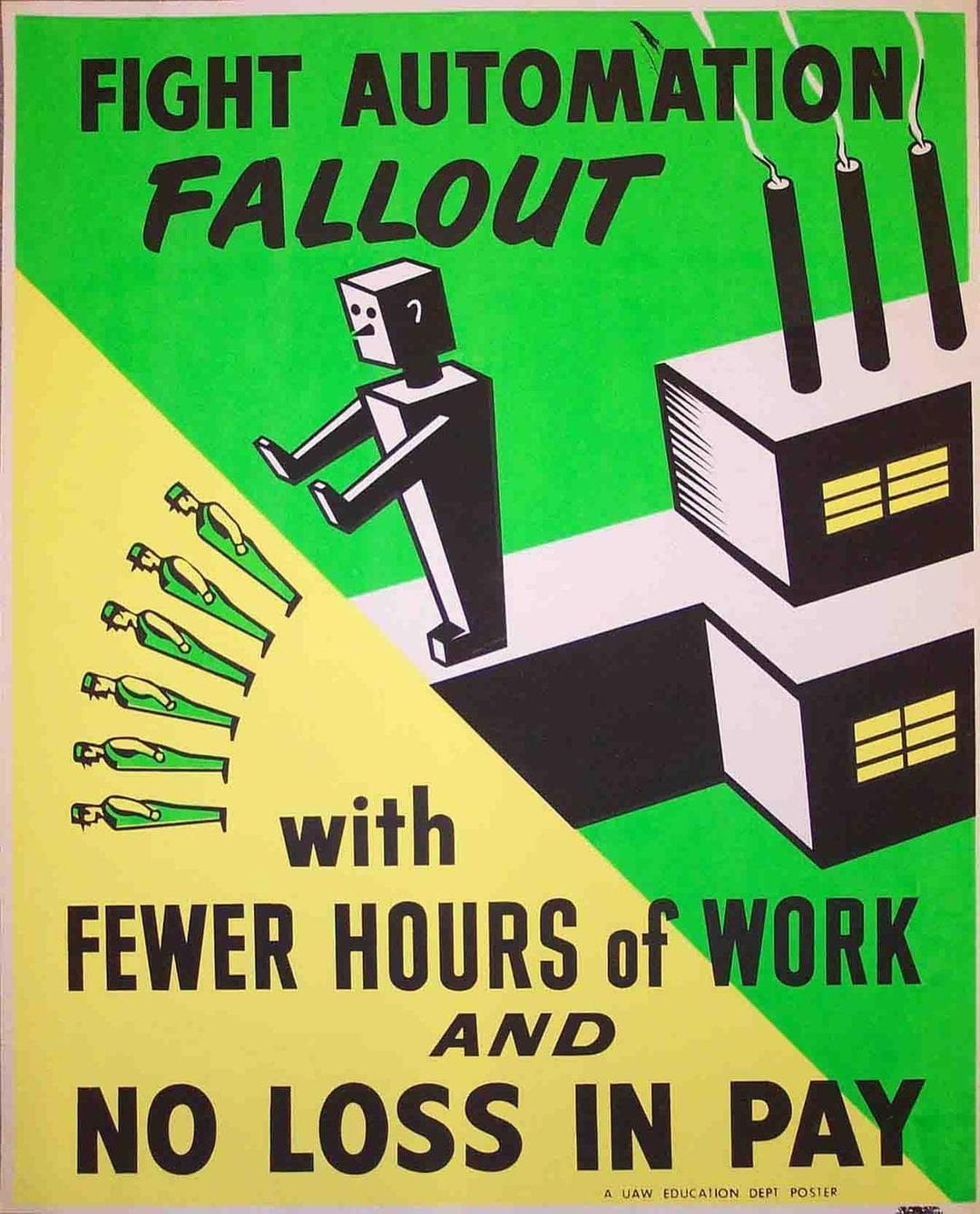

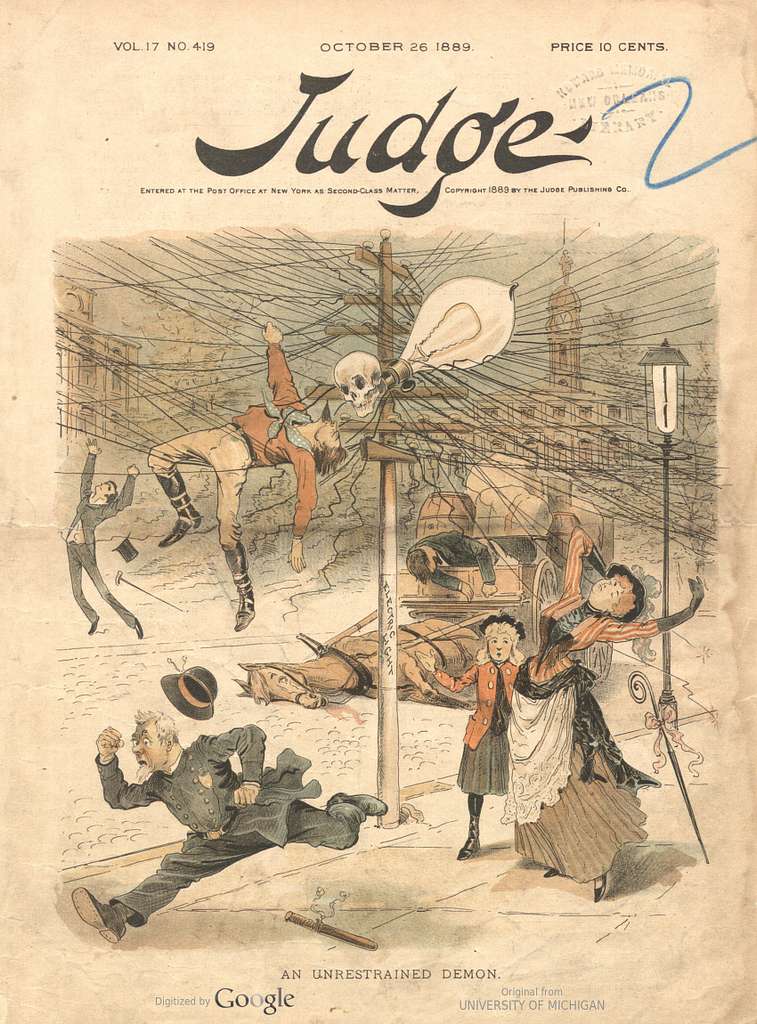

(1) A poster made by the United Auto Workers in the 1950s | (2) The cover of Judge Magazine from Oct 1889

Rejecting convenience and automation isn't new, either. Even electricity was rejected, and look where we are now! This doesn't mean the supporters of these causes were insane, though. They correctly recognized the pros and cons of automation and, just like many are doing today, advocated against it.

Today, most of us accept and welcome machines and electricity into our lives. We no longer have second thoughts about turning on a light. Machines are simply part of our lives.

My point is that we are going through yet another societal shift. We have hit the next step.

We've accepted machines and electricity, but what will we do now that AI is here? Is AI wrong or right? Will it destroy the workforce and society?

While most of us can agree that machines and electricity help us every day, what would the world be like if we had rejected them? Just because we accepted them into our lives doesn't mean that they helped us as a society.

In the modern day, we are faced with yet another automation debate.

Why I'm switching back to JetBrains

Going back to my Cursor situation, I have been an on-and-off WebStorm user. It is one of my favorite IDEs, but most of all, JetBrains seems to have a clear goal (at least for now). They accept AI into their editors, though it's not central to the editor's goal.

Cursor was simply not the solution I was seeking. In fact, there was no problem to begin with. It was an addition to a routine that already worked for me. I was just fine tabbing and typing my way to productivity.

To help explain this, I will include the current heroes of Visual Studio, VS Code, WebStorm, and Cursor. A hero is the big text you see when you land on a website; it's supposed to catch your attention first and describe the product or service in just a few words.

Visual Studio: "You and AI. Better Together."

Visual Studio Code: "The open source AI code editor"

WebStorm: "The JavaScript and TypeScript IDE – Make development more productive and enjoyable."

Cursor: "The AI Code Editor"

The reason I am switching back to JetBrains software is simply their hero. These days, I want to know what an IDE's goal is. In the case of JetBrains (WebStorm in particular), the goal they communicate to me is that I will be more productive and have a better experience while programming.

They are not telling me that I need AI in order to reach this level of productivity. They aren't telling me that I need AI to have a good time while programming. They are not centering the editor around AI. In fact, there was significantly more content about the editor than its AI features on the entire page. This is quite rare to find now! And... can you believe this? I had to scroll down to even see that it had AI features.

While I do want an editor with solid AI integration, I do not want AI to be the central part of programming.

Got it. Now what?

I didn't want to write this article to tell you whether you should or shouldn't use an AI editor. I simply don't have an answer, and I can tell you for certain that nobody else does either. It's a personal choice.

I believe the positive and negative impacts of AI are individual. I didn't lose my problem-solving skills from using AI, but I lost my style of learning. You might not struggle with either, and instead have your own unique set of pros and cons. Sure, we can make general guesses about how it will change society, but you don't know until you try.

So, I think the answer to this question lies in how you make decisions. Knowing the benefits and risks, what will you do? And don't feel any pressure from me, because I feel like both options are great choices. For me? An AI-unfocused editor is the right fit, but there are people who do great work with Cursor and other AI editors.

Take my words as a user who had a poor experience. Then, find someone who's a Cursor vibe coding master (just go on Twitter, haha), and listen to their words. Do you resonate better with my bad experience, or the other developer who's had a good experience with it?

At the end of the day, it should never be someone else who dictates your style of programming. Does that style include AI? Is it 110% AI-free? I am confident that you will make the right decision, knowing yourself. And if it wasn't the right choice, you can always adapt and change your situation for the better.